All developers and database administrators (DBAs) have been in a situation where they need to test a database deployment before going live. There’s no way around it: if you want to go live, test your environment in a sandbox before moving to a publicly visible space such as the web.

But have you ever wondered what DBAs need to consider before deploying their database environments both locally and live? We will tell you all about that in this blog post.

Local Database Deployment

Neither developers nor higher-tier DBAs have many worries when deploying databases locally. Perhaps they worry about configuration, but not much else. With local deployment, you already know all of the parameters of your system, so the only thing you need to do is allocate its resources appropriately. That’s it! If you’re running MySQL, just open my.cnf, set memory parameters such as innodb_buffer_pool_size so that the database uses 60-80% of RAM, restart MySQL (for example, by exiting WAMP and starting it again), and you’re done. Not that hard!
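As a rough illustration of the 60-80% rule above, here is a minimal Python sketch that derives an `innodb_buffer_pool_size` value from a given amount of RAM and renders a `[mysqld]` fragment for my.cnf. The function names and the 70% default are assumptions made for this example, and the rule of thumb fits best when the machine is dedicated to the database.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def buffer_pool_bytes(total_ram_bytes: int, fraction: float = 0.7) -> int:
    """Return a buffer pool size in bytes, rounded down to a whole MiB."""
    if not 0.6 <= fraction <= 0.8:
        raise ValueError("fraction should stay within the 60-80% range")
    raw = int(total_ram_bytes * fraction)
    return raw - (raw % MIB)


def render_my_cnf(total_ram_bytes: int, fraction: float = 0.7) -> str:
    """Render a my.cnf fragment that sets the InnoDB buffer pool size in MiB."""
    size_mib = buffer_pool_bytes(total_ram_bytes, fraction) // MIB
    return "\n".join([
        "[mysqld]",
        f"innodb_buffer_pool_size = {size_mib}M",
    ])


if __name__ == "__main__":
    # Example: a machine with 16 GiB of RAM (hypothetical value).
    print(render_my_cnf(16 * GIB))
```

On a development machine that also runs an IDE, a browser, and a web server, a lower fraction is usually the safer choice. The check in `buffer_pool_bytes` only enforces the range quoted in this post.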

To deploy a database, you simply use phpMyAdmin or log in through the CLI and run a couple of CREATE DATABASE statements (advanced DBAs might even have scripts that accomplish all of these tasks for them). Select your collation, set your default storage engine in my.cnf (e.g., InnoDB or XtraDB), and you’re off to a good start.
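The CREATE DATABASE step is easy to script. The following Python sketch only builds the statement text, with an explicit character set and collation. The helper name, the `shop` database names, and the `utf8mb4` / `utf8mb4_unicode_ci` defaults are illustrative assumptions, and executing the statement is left to whichever client you already use.

```python
import re

# Accept only simple identifiers so the generated SQL cannot be injected into.
_IDENTIFIER = re.compile(r"[A-Za-z0-9_]+")


def create_database_sql(name: str,
                        charset: str = "utf8mb4",
                        collation: str = "utf8mb4_unicode_ci") -> str:
    """Build a CREATE DATABASE statement with an explicit charset and collation."""
    for value in (name, charset, collation):
        if not _IDENTIFIER.fullmatch(value):
            raise ValueError(f"unsafe identifier: {value!r}")
    return (
        f"CREATE DATABASE IF NOT EXISTS `{name}` "
        f"CHARACTER SET {charset} COLLATE {collation};"
    )


if __name__ == "__main__":
    # Hypothetical database names for a local setup.
    for db in ("shop", "shop_test"):
        print(create_database_sql(db))
```

The printed statements can be pasted into phpMyAdmin or piped to the command-line client.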

Operating in a live environment introduces significantly more complexity than working locally. Live environments demand meticulous attention and maintenance, requiring a thorough evaluation of all server aspects before making adjustments. As users or customers begin to interact with your systems, the stakes are higher, and reactive changes are often insufficient to address issues effectively. Proactive planning and optimization are essential to ensure smooth operations and prevent disruptions in a live setting.

Deploying Databases in a Live Environment

Developers and DBAs deploying databases in a live environment need to consider the following:

- Customer base size: Large customer bases mean that even short downtime could cost thousands.

- Server usage distribution: Deploy databases on underutilized servers to balance the load.

- Necessity of additional databases: Ensure additional databases are justified and meet regulatory requirements.
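The server usage point above can be sketched in a few lines of Python. This example assumes you already have CPU and memory utilization figures for each candidate server. The `Server` type, the server names, and the scoring rule (judge each server by its busier resource) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Server:
    name: str
    cpu_load: float     # fraction of CPU in use, 0.0 to 1.0
    memory_load: float  # fraction of RAM in use, 0.0 to 1.0


def least_utilized(servers: list[Server]) -> Server:
    """Return the server whose busier resource has the most headroom."""
    if not servers:
        raise ValueError("no candidate servers given")
    return min(servers, key=lambda s: max(s.cpu_load, s.memory_load))


if __name__ == "__main__":
    # Hypothetical utilization figures.
    candidates = [
        Server("db-01", cpu_load=0.82, memory_load=0.64),
        Server("db-02", cpu_load=0.31, memory_load=0.45),
        Server("db-03", cpu_load=0.28, memory_load=0.90),
    ]
    print(least_utilized(candidates).name)
```

Scoring by the busier of the two resources keeps a server with an idle CPU but nearly full memory from being picked.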

Carefully consider these questions before deploying a database:

- Who? Who is responsible for deploying the database, and who will it serve?

- What? What is the intended function and purpose of the database?

- Why? Why is the database being deployed, and what objectives does it fulfill?

The answers to these questions will help determine whether deploying the database is the right decision for your use case. If the rationale for deployment is sound, proceed with confidence. However, before deployment, it’s essential to review and adjust configuration files to optimize performance based on your system’s specifications. Evaluate critical factors such as available RAM, storage capacity, and CPU capabilities. Set parameters accordingly to maximize efficiency. If determining these settings is challenging, consider automating database processes to streamline and enhance the deployment process.
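Before touching any configuration file, it helps to write down what the machine actually has. The sketch below uses only the Python standard library to collect CPU count, free disk space, and total RAM. The function name and the returned keys are assumptions for this example, and the RAM lookup relies on `os.sysconf`, which is available on Linux and most Unix-like systems but not on Windows.

```python
import os
import shutil


def system_specs(data_path: str = ".") -> dict:
    """Collect CPU count, free disk space, and (where available) total RAM."""
    specs = {
        "cpu_count": os.cpu_count(),
        "disk_free_bytes": shutil.disk_usage(data_path).free,
        "ram_bytes": None,
    }
    try:
        # Page size times number of physical pages gives total RAM in bytes.
        specs["ram_bytes"] = (
            os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
        )
    except (AttributeError, ValueError, OSError):
        pass  # leave RAM as None when the platform cannot report it
    return specs


if __name__ == "__main__":
    for key, value in system_specs().items():
        print(f"{key}: {value}")
```

Pass the directory that will hold the database files as `data_path` so the free-space figure reflects the right volume.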

Automating Processes in Deployment

“Decide which parameters you are going to set and to what values” sounds easy. 1, 2, 3, done? No. This step requires careful consideration. One step in the wrong direction and your database is in ruins. Thankfully, there are tools that can assist you in this realm.

Automating database processes helps keep deployments stable and efficient. dbWatch Control Center supports various database systems, including MySQL, MariaDB, PostgreSQL, Oracle, Sybase, and SQL Server. It allows users to automate tasks such as performance checks, replication, and security updates.

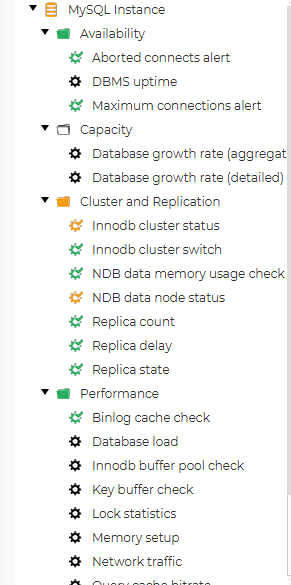

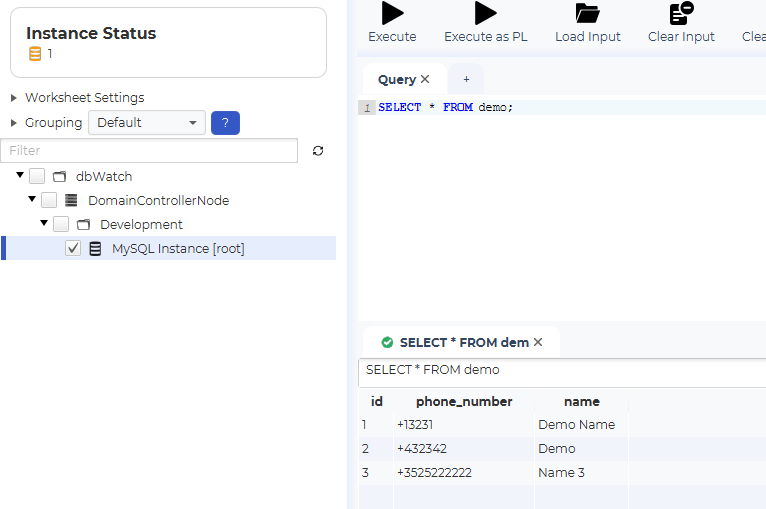

Here’s an example of how MySQL jobs appear in dbWatch:

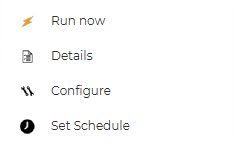

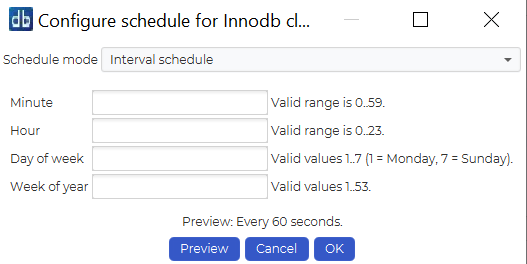

To automate a job, right-click, select “Set Schedule,” and configure the job:

Database Farm Management

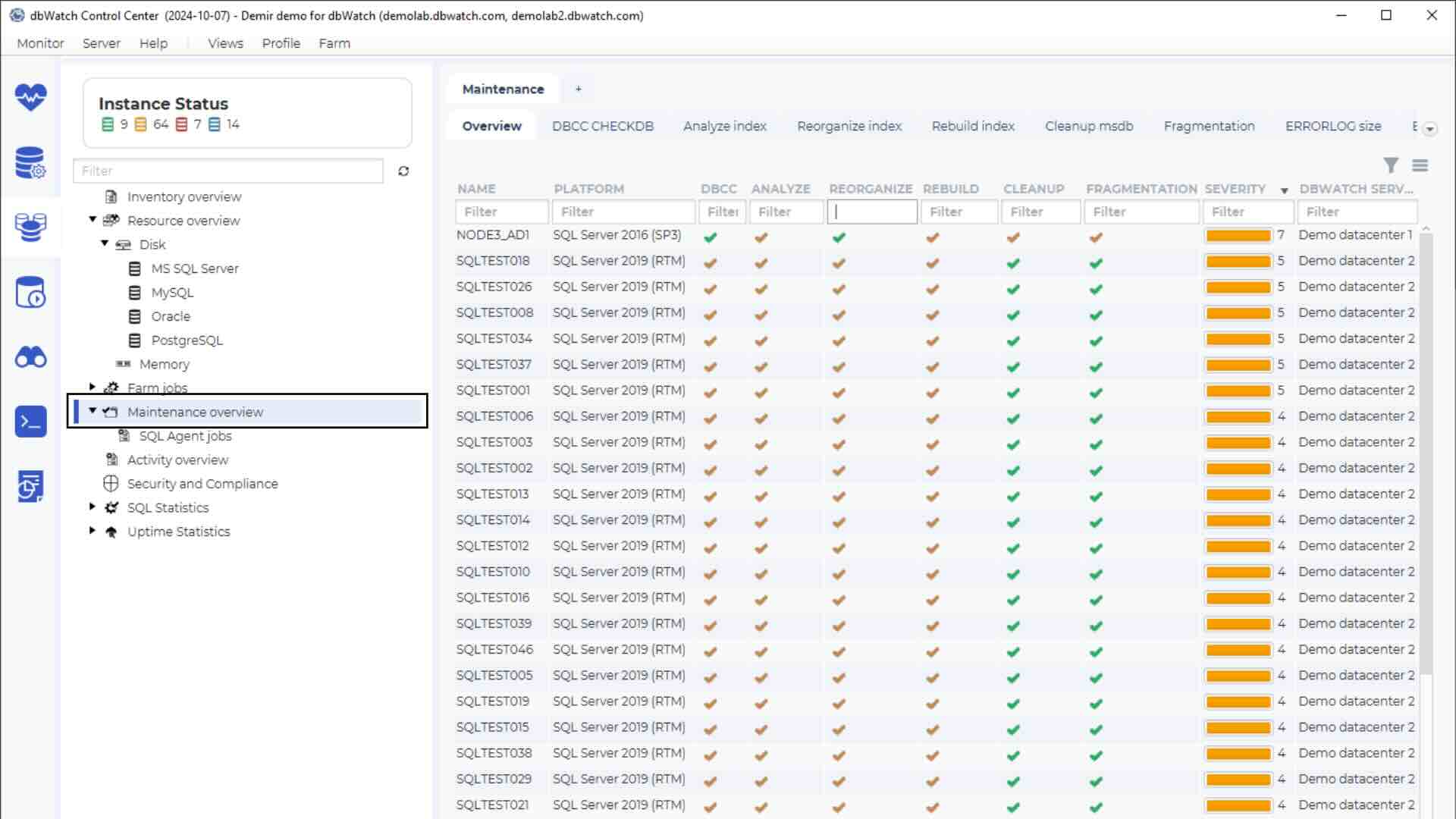

Use the Database Farm feature in dbWatch to monitor job statuses and address warnings or alarms as needed.

dbWatch Control Center helps streamline deployment in local or live environments, offering automation, monitoring, and troubleshooting tools.

Discover the impact dbWatch can make on your organization. Download a trial version to monitor up to 5 instances for 6 months.